For companies in the early stages of grappling with big data, the analytic lifecycle (model building, deployment, maintenance) can be daunting. In earlier posts I highlighted some new tools that simplify aspects of the analytic lifecycle, including the early phases of model building. But while tools are allowing companies to offload routine analytic tasks to business analysts, experienced modelers are still needed to fine-tune and optimize mission-critical algorithms.

Model Selection: Accuracy and other considerations

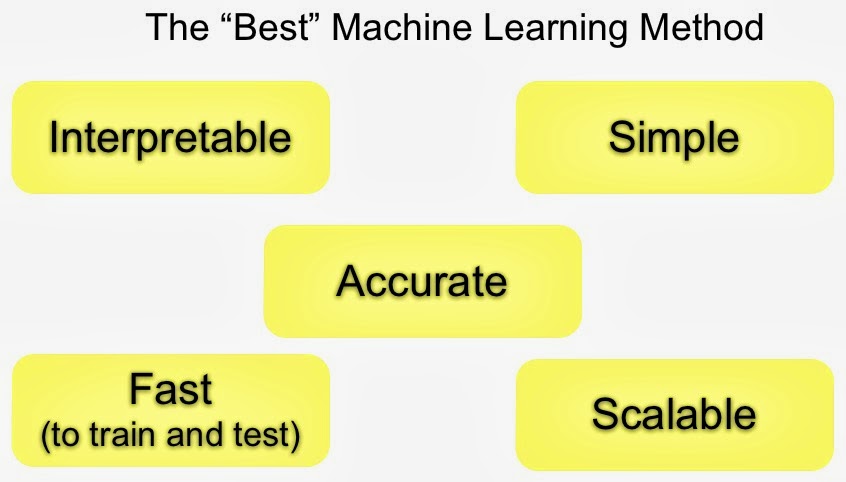

Accuracy (1) is the main objective and a lot of effort goes towards raising it. But in practice tradeoffs have to be made, and other considerations play a role in model selection. Speed (to train/score) is important if the model is to be used in production. Interpretability is critical if a model has to be explained for transparency (2) reasons (“black-boxes” are always an option, but are opaque by definition). Simplicity is important for practical reasons: if a model has “too many knobs to tune” and optimizations have to be done manually, it might be too involved to build and maintain in production (3).
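Since “accuracy” means different things for different tasks, here is a minimal Python sketch of two common ways to measure it: the share of correct categories for a classifier, and the average miss for a regression model. The function names and the toy data are mine, chosen for illustration; they don't come from any of the tools discussed in this post.

```python
def classification_accuracy(y_true, y_pred):
    """Fraction of examples whose predicted category matches the true one."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average distance between predicted and true numeric values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy classifier output: 3 of the 4 labels are correct.
labels_true = ["spam", "ham", "spam", "ham"]
labels_pred = ["spam", "ham", "ham", "ham"]
acc = classification_accuracy(labels_true, labels_pred)

# Toy regression output: misses of 1.0, 0.0 and 2.0.
values_true = [10.0, 12.0, 15.0]
values_pred = [11.0, 12.0, 13.0]
mae = mean_absolute_error(values_true, values_pred)

print(f"accuracy={acc:.2f}, mean absolute error={mae:.2f}")
```

Higher is better for the first number and lower is better for the second, which is one reason comparing models across tasks takes some care.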

Chances are a model that’s fast, easy to explain (interpretable), and easy to tune (simple) is less accurate (4). Experienced model builders are valuable precisely because they’ve weighed these tradeoffs across many domains and settings. Unfortunately, not many companies have experts who can identify, build, deploy, and maintain models at scale. (An example from Google illustrates the kinds of issues that can come up.)
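To make the tradeoff concrete, here is a small sketch in plain Python on made-up data (a noisy sine curve, my assumption). It compares a straight-line fit, which is a parametric model that boils down to two numbers, with a k-nearest-neighbors predictor, which has to keep the whole training set around. It is a toy setup, not a benchmark of any product mentioned here.

```python
import math
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; the model is just two numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def predict_line(model, x):
    intercept, slope = model
    return intercept + slope * x


def predict_knn(train, x, k=5):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def mean_abs_error(predict, data):
    return sum(abs(predict(x) - y) for x, y in data) / len(data)


rng = random.Random(0)  # fixed seed so the run is repeatable


def sample(n):
    """Draw n noisy points from a sine curve (hypothetical toy data)."""
    xs = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.1)) for x in xs]


train, test = sample(300), sample(100)
line = fit_line([x for x, _ in train], [y for _, y in train])

line_error = mean_abs_error(lambda x: predict_line(line, x), test)
knn_error = mean_abs_error(lambda x: predict_knn(train, x), test)

print(f"line: error={line_error:.3f}, stored numbers={len(line)}")
print(f"k-NN: error={knn_error:.3f}, stored numbers={2 * len(train)}")
```

On this curved data the nearest-neighbors predictor should come out ahead on error, but every prediction scans the full training set, while the line is trivial to score and to explain. On data that really is linear, the ranking could easily flip.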

Fast and/or scalable

scikit-learn is a popular, well-documented package with models that leverage IPython’s distributed computing features. Over time MLbase will include an optimizer that simplifies model selection for a variety of machine-learning tasks. Depending on their performance requirements, scikit-learn and MLbase users may still need to recode models to speed them up for production. Solutions like VW, H2O, and wise.io give users access to fast and scalable implementations of a few algorithms: in the case of wise.io, the company focuses only on Random Forest. Statistics software vendors like Revolution Analytics, SAS, and SPSS have been introducing scalable algorithms and tools for managing the analytic lifecycle.

The creators of a popular open source machine-learning project recently launched a startup focused on building commercial-grade options and tools. GraphLab provides fast toolkits for a range of problems including collaborative filtering, clustering, text mining (topic models), and graph analytics. Having had a chance to play with an early version of their IPython interface, I think that it will really open up their libraries to a much broader user base.

Scaling and speeding up accurate algorithms

One startup has been particularly focused on speeding up a wide range of machine learning methods. Skytree offers highly optimized, distributed (5) algorithms for all the standard machine-learning tasks (6). The company has proprietary implementations of highly accurate algorithms (e.g., kernel density estimation or k-nearest neighbors) that are fast and that scale to very large (7) data sets (Skytree integrates and is used with Hadoop). By giving access to a broader set of methods, Skytree enables users to make decisions across different factors (accuracy, speed, simplicity, etc.).
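To see why methods like kernel density estimation need speeding up in the first place, here is a textbook brute-force version in plain Python. It is emphatically not Skytree's implementation, which is proprietary; the function name, the Gaussian kernel, and the bandwidth value are my assumptions for illustration.

```python
import math


def gaussian_kde(points, query, bandwidth):
    """Brute-force kernel density estimate at `query` with a Gaussian kernel.

    Every call touches every training point, so scoring m queries against
    n points costs on the order of n * m kernel evaluations.
    """
    if not points or bandwidth <= 0:
        raise ValueError("need at least one point and a positive bandwidth")
    norm = 1.0 / (len(points) * bandwidth * math.sqrt(2.0 * math.pi))
    total = sum(math.exp(-0.5 * ((query - p) / bandwidth) ** 2) for p in points)
    return norm * total


# Toy one-dimensional data: a cluster near 1 and a smaller one near 5.
points = [1.0, 1.2, 0.8, 5.0, 5.1]

dense = gaussian_kde(points, 1.0, bandwidth=0.5)   # inside the first cluster
sparse = gaussian_kde(points, 3.0, bandwidth=0.5)  # in the gap between clusters

print(f"density at 1.0: {dense:.4f}")
print(f"density at 3.0: {sparse:.4f}")
```

With five points the cost is invisible, but the same loop over hundreds of millions of records and queries is where optimized and distributed implementations earn their keep.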

To assist users in navigating their library of algorithms, Skytree organizes them by tasks and industry solutions. Early this week the company announced a program called Second Opinion that pairs users with Skytree experts, testing techniques and tools. Sometime in the near future, the company’s library of algorithms will be accessible via Skytree Adviser – an intuitive interface that empowers business analysts to handle advanced analytics. Among other things, Skytree Adviser automatically matches machine learning methods to users’ data sets. Once that happens, ordinary users will have fast, scalable, and accurate algorithms at their disposal.

Related posts:

- Data scientists tackle the analytic lifecycle

- Data Analysis: Just one component of the Data Science workflow

- Data analysis tools target non-experts

(1) “Accuracy” depends on the task – for a classifier it means suggesting the correct category, for regression it means coming close to the correct value …

(2) Some examples: in finance, consumers need to know why their credit rating dropped; in health care, diagnostic tools need to tease out key variables.

(3) As I pointed out in my post on the analytic lifecycle, a certain amount of “wash, rinse, repeat” takes place.

(4) Generally speaking (but not always): Parametric models are simpler, faster, and more interpretable. On the other hand, non-parametric models (nearest neighbors, kernel density estimation, SVM, Random Forest) are more accurate. I appreciate the notion of the Unreasonable Effectiveness of Data (focus on amassing data, and simple machine learning methods will suffice). But note that it doesn’t preclude work on making algorithms faster and more efficient, while maintaining accuracy (as a recent example from Google highlights).

(5) Skytree’s algorithms also work well on a single beefy server.

(6) This includes clustering, classification, regression (prediction), density estimation, dimension reduction, and multivariate matching (finding similar items). According to Skytree CEO Martin Hack the three most popular uses of their software are recommendations, predictions, and outlier detection.

(7) Skytree powers a recommendation system for a customer with 250 million users.

O’Reilly Strata Conference — Strata brings together the leading minds in data science and big data — decision makers and practitioners driving the future of their businesses and technologies. Get the skills, tools, and strategies you need to make data work.

Strata + Hadoop World: October 28-30 | New York, NY

Strata in London: November 15-17 | London, England

Strata in Santa Clara: February 11-13 | Santa Clara, CA